Conventional computers were originally designed to automate laborious mathematical operations by executing deterministic and accurate algorithms, i.e. by repeatedly applying (simple) operations to data. The computational complexity of a problem measures, how efficiently it can be broken down into such smaller operations in relation to the amount of data to be processed – i.e. a program’s complexity can scale logarithmically, linearly, polynomically, etc. with the size of the data set. Unfortunately, many highly relevant problems, such as many optimization problems, physics simulations, Bayesian inference, protein folding, etc., fall into a complexity class, where a linear increase in the size, precision or dimensionality of the data-set comes at an exponential cost (or worse) in terms of time or computing resources. In such cases, heuristic or non-deterministic methods can often find sufficiently precise or accurate results using much fewer resources. Such methods might not yield provably optimal results, or they might yield different results every time they are run, but each of these results (or their joint statistics) approximates the desired result. This can be a worthwhile trade-off, in particular if the problem to be solved is inherently stochastic, such as thermodynamic or quantum-systems, if the solution only needs to be accurate or optimal to some degree, or if no efficient algorithm is known, at all.

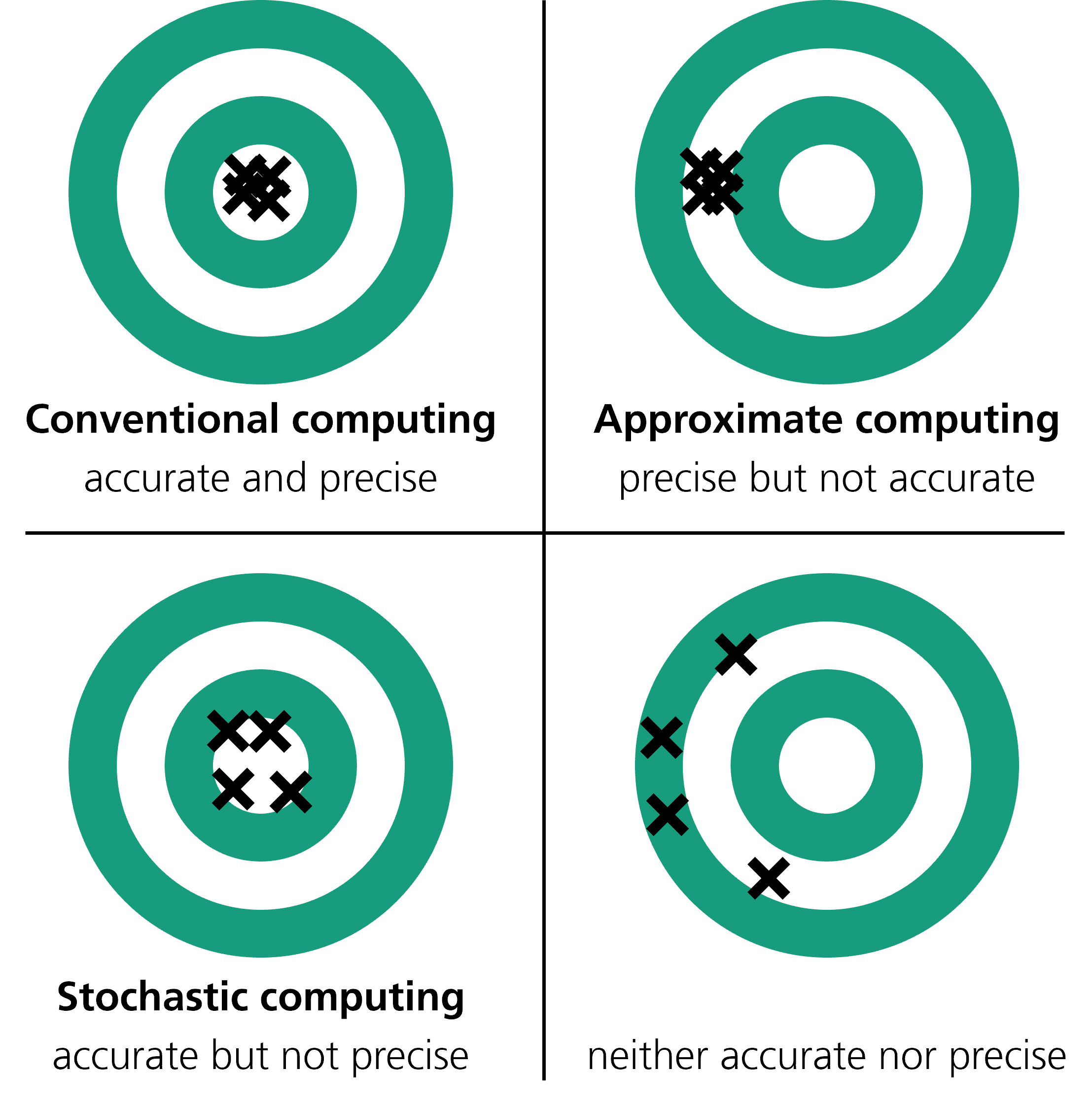

While a strict classification is difficult (indeed, even conventional algorithms often only approximate optimal results to some finite precision), there are several different “flavors” of such computing approaches.

Approximate Computing

Approximate computing recognizes that in many cases a lower range of computational accuracy is acceptable in order to save time, design space and energy. The approach employs deterministic hardware designs so it does not rely on assumptions about the stochastic nature of underlying processes and creates precise but inaccurate results. Approximate computing is applied throughout the technology design stack, from devices over circuits and architectures to software.

E.g. The number π can be approximated by Leibniz formula (π/4 = 1 – 1/3 + 1/5 – 1/7 +…) which will always give π=3.041839… when using 10 terms. This makes the method precise but not accurate.

Stochastic Computing

In stochastic computing the input data represent a probability instead of real numbers. The information is encoded as ‘stochastic number’ over the frequentness of a bit in time. For example when using binary numbers (based on bits ‘0’ and ‘1’), the bit sequence 10101000 is read as 0.375 by reading out the relation of three ‘1’s to eight digits (3/8=0.375). The classical meaning of the number (in the example a decimal 296) is not of importance. The probability does only depend on the relative amount of ‘1’s but neither on the order of ‘1’s and ‘0’s nor on the length of the bit sequence. This makes the number format robust against random bit flips by noise or radiation and against skew in the arrival time of the inputs. Higher precision is generated by simply extending the randomized bit sequence, but the benefit of this decreases exponentially.

This approach is non-deterministic and thus imprecise even though the circuit can be deterministic. In the example of above this means that when randomly placing points in a square that contains a circle of same height, the chance to land in the circle is roughly π/4, but each repetition will create randomly different numbers close to π/4. This makes the method accurate but not precise.

Some logic operations can be implemented very efficient by stochastic computing. For example multiplication can be performed by sending the bits sequentially through a single AND gate. However, other operations are more complicated to realize.

Limitations of stochastic computing arise from the fact that independently generated, uncorrelated random numbers are necessary. The hardware effort for generating these numbers can be significant. Also, an operation on numbers that originate from calculations applied on one shared original number, will not create correct results because the stochastic numbers are not independent.

Probabilistic Computing

Probabilistic computing exploits the intrinsic probabilistic behavior of a circuit or device to create true random numbers. The approach is often based on the stochastic behavior of a binary switch that create so called p-bits whose randomness of ‘1’ and ‘0’ is tuneable. Those device are more compact compared to digital random number generators, consume less energy and truly create random numbers from thermal noise or other physical events.

Quantum Computing and Annealing

Quantum Computing is based on the quantum properties of an ensemble of (artificial) quantum particles. Based on the laws of quantum mechanics, it is impossible to observe their full state directly. More definitely, a quantum mechanical measurement process is required which returns just one specific state with its corresponding probability, although the full state might be a superposition of several states. In order to obtain reasonable results, which are the different states and their probability, a large number of repetitions is required, so quantum computing itself belongs to the group of stochastic computing approaches.

This holds for universal quantum computing as well as for quantum annealing. In the former case of universal quantum computing, the final state is deterministically obtained by the application of quantum gates to the qubits (for more details see Quantum Computing). Thus, by increasing the number of repetitions the desired result can be improved to (in principle) arbitrary precision. In the case of quantum annealing, the system is first prepared in a simple initial state and then evolves over time to the full problems eigenstate, while staying in the ground state, the state with lowest energy (for more details see Adiabatic Computing). In this case quantum effects like tunneling become relevant, such that reaching the correct final state is itself a probabilistic process. Therefore, several repetitions are required to obtain a reasonable probability for the lowest energetic state.

Literature

| Title | Author | Year |

| A Survey of Techniques for Approximate Computing | S. Mittal | 2016 |

| Approximate computing: An emerging paradigm for energy-efficient design | J. Han, M. Orshansky | 2013 |

| Survey of Stochastic-Based Computation Paradigms | M. Alawad, M. Lin | 2016 |

| Introduction to Stochastic Computing and its Challenges | J. P. Hayes | 2015 |