Neuromorphic computing is the multidisciplinary effort to build sensors, processors and algorithms based on principles observed in neurobiology. In particular, most neuromorphic systems are designed around the specific requirements of neural network models: neural networks are composed of many individually simple neurons that all operate in parallel and communicate only via direct synaptic connections. Likewise, neuromorphic systems typically feature many simple processing elements that run concurrently and communicate via point-to-point connections instead of shared memory. These basic features, which mimic the morphology of biological neural systems, give the field its name and distinguish it from conventional general-purpose computer architectures.

Many approaches under the umbrella of neuromorphic computing take the biological inspiration further, e.g. by implementing the behavior of individual neurons with dedicated analog electronic circuits, by incorporating hardware support for biologically plausible learning algorithms, and/or by using only asynchronous event-based communication. Others focus on specific engineering challenges such as improving density and power efficiency through new materials, memory technologies, fabrication methods, circuit design techniques and system architectures. Owing to these different objectives and the many disciplines involved, neuromorphic computing is a heterogeneous field, with systems at various levels of maturity, ranging from early-stage research and development to first commercial products.
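The neuron behavior and event-based communication mentioned above can be illustrated in software. The following is a minimal sketch, assuming a discrete-time leaky integrate-and-fire (LIF) neuron, which is one common but by no means universal neuron model; the class and function names and the parameter values (`leak`, `threshold`) are hypothetical. Because it is a clocked simulation, it captures the sparsity of event-based communication (only neurons that spike send anything, and only to their direct targets) but not true asynchrony.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (illustrative parameters)."""
    leak: float = 0.9        # fraction of membrane potential retained per time step
    threshold: float = 1.0   # potential at which the neuron emits a spike event
    v: float = 0.0           # membrane potential

    def step(self, input_current: float) -> bool:
        """Integrate input for one time step; return True if the neuron spikes."""
        self.v = self.leak * self.v + input_current
        if self.v >= self.threshold:
            self.v = 0.0     # reset after spiking
            return True
        return False


def simulate(weights: list, external_input: dict, steps: int,
             leak: float = 0.9, threshold: float = 1.0) -> list:
    """Simulate a small network in which only spike events are communicated.

    weights[i] maps target index -> synaptic weight for neuron i's outgoing connections.
    external_input[t] maps neuron index -> current injected at time step t.
    Returns the spike events as (time step, neuron index) pairs.
    """
    n = len(weights)
    neurons = [LIFNeuron(leak, threshold) for _ in range(n)]
    pending = [0.0] * n      # synaptic input delivered by the previous step's events
    events = []
    for t in range(steps):
        spiking = []
        for i, neuron in enumerate(neurons):
            current = pending[i] + external_input.get(t, {}).get(i, 0.0)
            if neuron.step(current):
                spiking.append(i)
        pending = [0.0] * n
        # Event-based communication: only neurons that spiked send anything,
        # and only to their direct synaptic targets.
        for i in spiking:
            events.append((t, i))
            for target, w in weights[i].items():
                pending[target] += w
    return events
```

In a three-neuron chain, a single input pulse propagates as exactly one event per neuron: `simulate([{1: 1.2}, {2: 1.2}, {}], {0: {0: 1.5}}, steps=4)` returns `[(0, 0), (1, 1), (2, 2)]`, while a subthreshold input produces no events at all, so nothing is communicated.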

Practical Realization

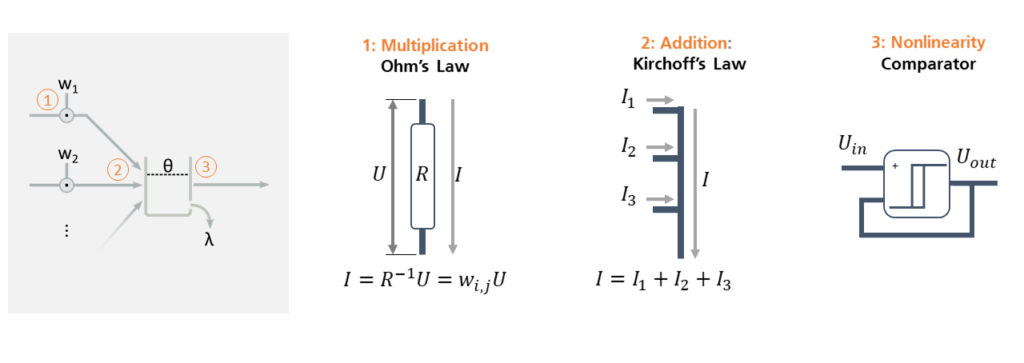

Although a concise definition of neuromorphic computing is difficult, the hardware acceleration of (some class of) neural networks is one of its most distinguishing features. Unlike conventional computers, which manipulate symbols in memory according to an arbitrary sequence of instructions (a “program”), neuromorphic systems typically perform computations on the input data by simulating a neural network whose parameters determine the operation performed. Neuromorphic hardware therefore does not typically use the conventional von Neumann architecture with dedicated arithmetic logic units, program memory and main memory; instead, memory for storing network parameters is often distributed across many computational elements or cores, which operate in parallel and communicate with each other via packet- or circuit-switched point-to-point connections. In most instances, these computational cores correspond to single neurons or small sets of neurons, which may be implemented by dedicated analog or digital electronic circuits, photonic or other non-electronic circuits, or specialized processors that execute microcode to simulate the behavior of neurons.
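The contrast with a von Neumann machine can be sketched with a toy model. It assumes that each hypothetical `Core` stores only the weights of its own neurons plus a local routing table, and that spikes travel as small packets carrying just the source address (similar in spirit to address-event representation). Real chips differ widely in neuron models, routing schemes and timing; the names, the non-leaky neurons, the virtual input address `-1` and the one-step delivery delay are all illustrative assumptions. The `build` helper shows that the same structure computes different functions depending only on its weights.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Packet:
    """A spike event sent point to point: only the source address travels."""
    src_core: int
    src_neuron: int


@dataclass
class Core:
    """Hypothetical core: a few integrate-and-fire neurons plus their own synaptic memory."""
    core_id: int
    n_neurons: int
    threshold: float = 1.0
    # Local synaptic memory: (src_core, src_neuron) -> {local neuron index: weight}
    synapses: dict = field(default_factory=dict)
    # Local routing table: local neuron index -> destination core ids
    routes: dict = field(default_factory=dict)
    potentials: list = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        self.potentials = [0.0] * self.n_neurons

    def receive(self, pkt: Packet) -> None:
        """Integrate an incoming spike using only weights stored in this core."""
        for local, w in self.synapses.get((pkt.src_core, pkt.src_neuron), {}).items():
            self.potentials[local] += w

    def fire(self) -> list:
        """Reset and return neurons at or above threshold (no leak, for brevity)."""
        spiked = [i for i, v in enumerate(self.potentials) if v >= self.threshold]
        for i in spiked:
            self.potentials[i] = 0.0
        return spiked


def run(cores: list, stimuli: list, steps: int) -> list:
    """Advance all cores in lockstep; packets arrive one step after they are sent.

    Assumes cores[k].core_id == k. stimuli: (destination core id, Packet) pairs
    injected before the first step. Returns (core id, neuron index) per spike.
    """
    queue = deque(stimuli)
    spikes = []
    for _ in range(steps):
        # Deliver everything sent during the previous step.
        while queue:
            dst, pkt = queue.popleft()
            cores[dst].receive(pkt)
        # Each core updates using only its own state (in hardware: in parallel).
        for core in cores:
            for i in core.fire():
                spikes.append((core.core_id, i))
                for dst in core.routes.get(i, []):
                    queue.append((dst, Packet(core.core_id, i)))
    return spikes


def build(w: float) -> list:
    """Core 0 relays two external inputs (virtual source core -1) to core 1,
    whose single neuron sums them with synaptic weight w."""
    c0 = Core(0, 2, synapses={(-1, 0): {0: 1.0}, (-1, 1): {1: 1.0}}, routes={0: [1], 1: [1]})
    c1 = Core(1, 1, synapses={(0, 0): {0: w}, (0, 1): {0: w}})
    return [c0, c1]


# Same wiring, different weights: w = 0.5 behaves like AND, w = 1.0 like OR.
one_input = [(0, Packet(-1, 0))]
print(run(build(0.5), one_input, steps=3))  # [(0, 0)]
print(run(build(1.0), one_input, steps=3))  # [(0, 0), (1, 0)]
```

No core ever reads another core's memory; the only shared resource is the packet queue standing in for the interconnect.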

Different approaches to neuromorphic computing promise different benefits. One of the earliest strands of research investigates the use of novel materials and devices, e.g. floating-gate transistors, memristive devices or other non-volatile memory, to implement specific components of neural networks, e.g. synapses, efficiently and compactly. Another approach uses novel analog/mixed-signal circuits to implement the behavior of neurons and synapses efficiently in standard CMOS. To cope with the inevitable noise and device-to-device variations of analog circuits, some approaches focus on neural network architectures that are inherently more robust to these imperfections, e.g. spiking neural networks. Other concepts, e.g. low-precision computing, high-/hyper-dimensional computing or vector-symbolic architectures, use a high degree of redundancy to counteract the low precision of individual neurons and synapses. A yet more radical approach, reservoir computing, even exploits the variability of neuromorphic circuits as a source of diverse dynamics that can be used for computation.
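The redundancy argument for high-dimensional computing can be illustrated with a small experiment. The sketch below assumes one common formulation of hyperdimensional computing: random bipolar (+1/-1) vectors stored in an item memory and compared by normalized dot product. The dimension of 10,000, the 30% flip rate and the symbol names are illustrative choices, not values taken from any particular system. Each component carries only one bit, yet a stored symbol can still be recognized after a large fraction of its components have been corrupted.

```python
import random

D = 10_000  # hypervector dimensionality (illustrative choice)


def random_hv(rng: random.Random) -> list[int]:
    """Random bipolar hypervector; every component carries only one bit (+1 or -1)."""
    return [rng.choice((-1, 1)) for _ in range(D)]


def corrupt(hv: list[int], flip_rate: float, rng: random.Random) -> list[int]:
    """Flip each component independently with probability flip_rate (models unreliable hardware)."""
    return [-x if rng.random() < flip_rate else x for x in hv]


def similarity(a: list[int], b: list[int]) -> float:
    """Normalized dot product: 1.0 for identical vectors, close to 0.0 for unrelated ones."""
    return sum(x * y for x, y in zip(a, b)) / len(a)


def recall(query: list[int], item_memory: dict[str, list[int]]) -> str:
    """Return the stored symbol whose hypervector is most similar to the query."""
    return max(item_memory, key=lambda name: similarity(query, item_memory[name]))


rng = random.Random(0)  # fixed seed for reproducibility
item_memory = {name: random_hv(rng) for name in ("apple", "banana", "cherry", "grape")}

# Flip 30% of the components of one stored vector, then try to recognize it.
noisy = corrupt(item_memory["cherry"], flip_rate=0.3, rng=rng)
print(recall(noisy, item_memory))
```

With flip rate p, the expected similarity between a corrupted vector and its original is 1 - 2p (0.4 here), whereas unrelated random vectors have similarity near 0 with a standard deviation of about 1/√D = 0.01. The correct symbol therefore wins by a wide margin, even though no individual component is reliable.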

Since neuromorphic circuits are custom-built to accelerate neural networks, they can achieve much higher efficiency than standard processors in terms of area, power consumption, latency and throughput, at the price of higher non-recurring engineering costs and reduced flexibility. Suitable application areas of neuromorphic computing therefore include signal processing and data fusion in sensors and sensor networks, audio and video processing for event-based sensors, and AI in (ultra-)low-power systems such as embedded, implanted or autonomous systems.
